SRI International

Technical Report

Addendum

Conficker C P2P Protocol and Implementation

Phillip Porras, Hassen Saidi, and Vinod Yegneswaran

http://mtc.sri.com/Conficker/P2P/

Release Date: 21 September 2009

Last Update: 21 September 2009

Computer Science Laboratory

SRI International

333 Ravenswood Avenue

Menlo Park CA 94025 USA

ABSTRACT

This report presents a reverse engineering of the obfuscated binary code image of the Conficker C peer-to-peer (P2P) service, captured on 5 March 2009 (UTC). The P2P service implements the functions necessary to bootstrap an infected host into the Conficker P2P network through scan-based peer discovery, and allows peers to share and spawn new binary logic directly into the currently running Conficker C process. Conficker's P2P logic and implementation are dissected and presented in source code form. The report documents its thread architecture, presents the P2P message structure and exchange protocol, and describes the major functional elements of this module.

1. Introduction

While much has been written about the inner workings of Conficker C and its predecessors, details have remained scant regarding the structure and logic of C's most significant new feature, its peer-to-peer (P2P) module. One likely reason is that decompilation of the P2P module is hindered by various binary transformations that obfuscate its logic, making disassembly and interpretation more involved. In fact, the P2P module of Conficker C stands apart as the only binary code segment so far in the Conficker family that has undergone substantial structural obfuscation to thwart reverse engineering.

The introduction of the P2P protocol in C is a rather important addition to what is already a large-scale menace to the Internet. Conficker's new peer network frees its drones from their reliance on the domain generation algorithm (DGA) for locating and pulling new signed binaries. This new peer network was critically needed, as whitehats have been blocking Conficker's DGA-generated domains from public registration since February 2009. While Conficker's authors were able to overcome these registration blocking efforts for a few Conficker B DGA sites, allowing many B hosts to upgrade to Conficker C, to our knowledge Conficker A machines have thus far been blocked from upgrading.

Nevertheless, even with its incomplete upgrade to Conficker C, this new peer network has already proven useful. It was recently used to distribute malicious binaries to Conficker C hosts [1]. Unfortunately, unlike the binary delivery disruptions over the DGA rendezvous points that were achieved by the Conficker Working Group [2], whitehats currently employ no equivalent capability to hinder binary distributions through Conficker's peer network.

One unusual aspect of the P2P scanning algorithm is that it searches for new peers by scanning the entire Internet address space. This choice is surprising, as C-infected hosts are currently overlays of Conficker B hosts. Conficker A and B both share a common flaw in their scanning logic, which limits their scans to one quarter of the Internet address space [3]. Why then does Conficker's P2P module probe the entire Internet, when the A and B scanning flaw makes it unlikely that C-infected hosts reside in the other three quarters of the address space? One explanation could be that the authors were simply unaware of the scanning flaw. Another could be that the module is also looking for hosts that were infected through local scanning or USB propagation.

Despite this mismatch in probing schemes, we believe the P2P module is a customized library developed specifically for Conficker. We further speculate that this module was most likely not coded by the same developers who coded the other major components of Conficker C. The narrowness of function does not suggest that it has been adapted from a broader P2P sharing algorithm (such as a file sharing algorithm or other rich P2P application). Conficker's P2P module stands self-contained as a C++ library, whereas the rest of the Conficker C codebase is written in C. The coding style is less sophisticated and less rich in functionality than that of other Conficker modules. The P2P module provides a limited peer command set, keeping complexity to a minimum - perhaps due to scheduling pressures and quality control concerns in deploying new functionality across millions of geographically dispersed victim machines.

Indeed, the simplicity of the P2P protocol does appear to limit the opportunity for exploitable mistakes. Overall, the P2P protocol operates as a simple binary exchange protocol, where peers connect with each other for the purpose of synchronizing to the binary payload signed with the highest version number. In this sense, the Conficker peer network incorporates the notion of a global highest binary payload version. The security for this protocol relies entirely on the use of an encryption and hash scheme that has already been vetted and deployed by the DGA protocol of Conficker. Both the header (through replication) and the binary payload are digitally signed, and are then cryptographically verified by the receiver. Although the protocol does not support complex data exchanges or network-wide synchronizations, such tasks remain easily achievable by simply encoding such logic into signed Windows binary logic and seeding such binaries into the peer network.

In another design decision, the Conficker authors chose to spawn the binary payload as a thread of the Conficker process, rather than fork binary payloads as independent processes. One implication of this decision is that, as a thread, the executable payload is granted direct access to the currently running Conficker C threads, data structures, memory buffers, and registries. Thus, a downloaded binary can hot patch critical data within a live C process, such as peer lists, the DGA's top-level domain (TLD) arrays, and other variables or seed data.

It also appears that the P2P service is intended to supersede Conficker's DGA service as the preferred method for distributing binaries to C drones. In Conficker A and B, the DGA is executed on each drone unconditionally. In Conficker C, the DGA executes only under the following condition: the P2P service spawning thread P2P_Main reports that it was unable to spawn the appropriate P2P threads, or there does not exist a binary payload within the P2P server directory that is available for sharing. In C, the DGA can clearly supplement the P2P service in locating and retrieving binary payloads. However, when a binary payload is retrieved and made available for propagation through the P2P service, C's DGA function will cease to operate. This suppression of the DGA will continue as long as the binary payload has not expired and been removed by the P2P server.
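The gating condition described above can be summarized in a short sketch. This is only an illustration of the stated rule, not code recovered from the binary; the function name `dga_should_run` and both parameter names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical summary of when Conficker C's DGA executes.
 * p2p_threads_spawned: P2P_Main reported that its threads were started.
 * payload_available:   an unexpired binary payload sits in the P2P server
 *                      directory and is available for sharing. */
bool dga_should_run(bool p2p_threads_spawned, bool payload_available)
{
    /* The DGA runs if the P2P service failed to start, or if there is
     * no payload to share; otherwise it stays suppressed. */
    return !p2p_threads_spawned || !payload_available;
}
```

Under this reading, the DGA is suppressed only while the P2P service is running and holds a live payload.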

In this report, we examine details of the underlying Conficker C P2P logic and its software structure, and review its P2P protocol. Significant work in understanding the P2P protocol has already been undertaken by members of the Conficker Working Group [4, 5]. In this report, we present the full reversal of the obfuscated binary, back to its human-readable C source code. We begin in Section 2, where we explain which obfuscation strategies were used by the Conficker developers to thwart reverse engineering attempts, such as that documented in this report. We outline the techniques that were applied to undo these obfuscations. Section 3 presents an overview of the P2P module's thread architecture, its calling structure, and its initialization steps. Section 4 presents an overview of the protocol message exchange rules. Sections 5, 6, and 7 describe the implementation of simple dialog exchanges that occur between peers, and Section 8 reveals the details of binary payload management. Our analysis concludes with an appendix that provides the decompiled C-like implementation of the full Conficker P2P code base.

2. Overcoming Conficker's Binary Obfuscation

Before we begin the presentation of the P2P service, we summarize the techniques employed by the Conficker authors to hinder reverse engineering of this module. We also present the techniques we used to overcome these obfuscations.

Each generation of Conficker has incorporated techniques such as dual-layer packing, encryption, and anti-debugging logic to hinder efforts to reverse its internal binary logic. Conficker C further extends these efforts by providing an additional layer of cloaking to its newly introduced P2P module.

2.1 P2P Module Obfuscations

The binary code segment that embodies the P2P module has undergone multiple layers of restructuring and binary transformations in an effort to substantially hinder its reverse engineering. These techniques have proven highly effective in thwarting the successful use of the disassemblers, decompilers, and code analysis routines commonly employed by the malware analysis community. In particular, three primary transformations were performed on the P2P module's code segment:

- API Call Obfuscation - Conficker employs a common obfuscation technique, in which library references and API calls are not imported and called directly. Rather, they are often replaced with indirect calls through registers in a manner that hides direct insight into which libraries and APIs are used within a segment. As API and library call analyses are critical for understanding the semantics of functions, loss of these references poses a significant problem to code interpretation.
- Control Flow Obfuscation - The control flow of Conficker's P2P module has been significantly obfuscated to hinder its disassembly and decompilation. Specifically, the contents of code blocks from each subroutine have been extracted and relocated throughout different portions of the executable. These different blocks (or chunks) are then referenced through unconditional and conditional jump instructions. In effect, the logical control flow of the P2P module has been obscured (spaghettied) to a degree that the module cannot be decompiled into coherent C-like code, which typically drives more in-depth and accurate code interpretation.
- Calling Convention Obfuscation - Decompilers depend on their ability to recognize compilation conventions such as function prologues and epilogues. Such segments help the decompiler interpret key information, such as calling conventions for each subroutine, which in turn enable the decompiler to interpret the proper number of function arguments and local variables. Unfortunately, the P2P module has been transformed to disrupt such interpretations. Each subroutine has been translated such that some parameters are passed through the stack using push instructions, while others are passed by registers, and in unpredictable order. In effect, these transforms utterly confuse decompilation attempts, generating inaccurate function argument and local variable lists per subroutine. In the presence of such errors, code interpretation is nearly futile.

2.2 Systematic Obfuscation Reversal

As part of our effort to understand Conficker, we have been driven to develop new techniques to deal with all the above-mentioned obfuscations. To date, we have been able to systematically undo all the binary transformations in the P2P module, and have produced a full decompilation of this module to a degree that approximates its original implementation. Furthermore, we are in the process of generalizing and automating the deobfuscation logic developed for Conficker. Here, we briefly summarize our deobfuscation strategies.

2.2.1 Using API Resolution to Deobfuscate API Calls

The P2P module employs 88 Windows API calls, all of which have undergone API call obfuscation. Rather than invoke APIs directly, the module initializes a global array with all API addresses. Calls are then made via registers loaded with address references from the global array, rather than via direct calls. In the presence of such transformations, we must largely rely on dynamic analysis to derive the targets of each obfuscated jump or call instruction. However, here we extend our analysis by employing a type inference analysis that enables us to infer which Windows API call is employed through an analysis of parameter types placed on the stack.
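To illustrate why this indirection defeats static tools, the following minimal sketch mimics the pattern in portable C. It is an analogy rather than recovered code: the table size, the slot index, and the names `api_table`, `init_api_table`, and `call_slot_2` are hypothetical, and the standard `strlen` function stands in for a Windows API.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical function-pointer type. The real table holds Windows API
 * addresses with many different signatures. */
typedef size_t (*api_fn)(const char *);

/* Stand-in for the global array that is filled with API addresses at startup. */
static api_fn api_table[4];

/* Illustrative initializer: stores one resolved address in a table slot. */
void init_api_table(void)
{
    api_table[2] = strlen;   /* slot index 2 is an arbitrary choice */
}

/* An obfuscated call site: no API name appears here, only a table slot. */
size_t call_slot_2(const char *s)
{
    api_fn target = api_table[2];   /* load the address, as into a register */
    return target(s);               /* indirect call through that value */
}
```

A disassembler looking only at `call_slot_2` sees a load and an indirect call; the identity of the target is known only once the table contents are recovered.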

A typical Windows XP installation contains more than a thousand dynamically linked libraries (DLLs), many of which may contain hundreds of exported functions. Fortunately, a running executable typically loads only a few DLL files and imports a few additional static libraries. This dynamic load and import list provides a first-tier constraint on the possible APIs invoked for a given executable. Using the loaded/import lists, we apply the following analysis to each unidentified call and jump target within the executable. We first analyze the number of elements pushed onto the stack prior to reaching the call site. This allows us to determine the number of arguments for the target function. The number of arguments represents a second constraint on the target API. We then apply dataflow analysis to trace how the return values of the unidentified target functions are related to arguments of other unidentified functions. These return value mappings represent our third constraint. Finally, the combined set of these constraints is matched against our derived type signatures of all candidate Windows APIs.
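The narrowing effect of these constraints can be sketched with a toy signature table. This is a deliberately simplified illustration, not our constraint satisfaction engine: the `api_sig` structure, the `match_api` function, and the four-entry candidate list are hypothetical, although the argument counts and return behavior listed for the four Windows APIs follow their documented prototypes.

```c
#include <stddef.h>
#include <stdbool.h>

/* Toy signature record; a real signature database is far richer. */
struct api_sig {
    const char *name;
    int         argc;          /* number of stack arguments */
    bool        returns_value; /* false for void functions */
};

/* Constraint 1 (assumed already applied): only APIs from loaded DLLs. */
static const struct api_sig candidates[] = {
    { "ExitThread",  1, false },
    { "CloseHandle", 1, true  },
    { "ReadFile",    5, true  },
    { "CreateFileA", 7, true  },
};

/* Count candidates consistent with the observed constraints and report the
 * last match; a unique match (count == 1) resolves the call target. */
size_t match_api(int argc, bool result_is_used, const char **match)
{
    size_t count = 0;
    for (size_t i = 0; i < sizeof candidates / sizeof candidates[0]; i++) {
        if (candidates[i].argc != argc)
            continue;                   /* constraint 2: argument count */
        if (result_is_used && !candidates[i].returns_value)
            continue;                   /* constraint 3: return-value flow */
        *match = candidates[i].name;
        count++;
    }
    return count;
}
```

When more than one candidate survives, further constraints (such as parameter types and constant values) are needed to disambiguate.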

To date, we have computed a database of API type signatures, containing all known and documented Windows APIs: over fifty thousand APIs provided by Microsoft, as well as the finite set of all constant values that some parameters can hold. We have developed an automated constraint satisfaction (CS) engine for malware analysis, which takes as input a set of constraints generated by any number of instructions, along with the constraints about function calls and their arguments.

Using our CS engine and this general approach, we can recover all jump targets, their arguments, and their return values, all of which are critical to understanding program semantics. Furthermore, as we propagate this information through the program structure, we can identify user-defined variables and structures with similar types.

2.2.2 Automated Import Table Reconstruction

Once the obfuscated APIs have been identified, we restructure the binary to reconstruct an import table for use in decompilation. We use an automated import table reconstruction algorithm that replaces all obfuscated calls with proper references to their corresponding Windows API. This is done by building a new import table and redirecting all calls to obfuscated APIs to the corresponding entry in the import table.

Consider the following obfuscated invocation of a Windows API, which appears within Conficker C:

    loc_9AD177:
        mov     [ebp+var_4], 0FFFFFFFFh
        push    dword_9BCB74
        pop     edx
        push    dword ptr [edx]
        pop     eax
        push    0
        call    dword ptr [eax+60h]

Our import reconstruction algorithm automatically replaces this code segment with a properly deobfuscated call, in this case, to ExitThread:

    loc_9AD177:
        mov     [ebp+var_4], 0FFFFFFFFh
        push    dword_9BCB74
        pop     edx
        push    dword ptr [edx]
        pop     eax
        push    0                   ; dwExitCode
        call    ds:ExitThread

This capability not only enables the deobfuscated code to be properly disassembled and decompiled, but also allows our resulting code to be instrumented and dynamically executed, if needed.

2.2.3 Automated Code Restructuring

Code chunking and random relocation are the next challenge that must be overcome. For example, consider the following disassembly of the Conficker function that tests whether a candidate IP address (for use by the P2P scan routine) corresponds to a private subnet:

    .text:009B327C
    .text:009B327C OBFUSCATED_VERSION_OF_is_private_subnet proc near
    .text:009B327C     mov ecx, eax
    .text:009B327E     and ecx, 0FFFFh
    .text:009B3284     cmp ecx, 0A8C0h
    .text:009B328A     jz loc_9B4264
    .text:009B3290     jmp off_9BAAA5   /* .text:009BAAA5 off_9BAAA5 dd offset loc_9AB10C */
    .text:009B3290 OBFUSCATED_VERSION_OF_is_private_subnet endp
    .
    .text:009B4264 loc_9B4264:
    .text:009B4264
    .text:009B4264     mov eax, 1
    .text:009B4269     retn
    .
    .text:009AB10C     cmp al, 0Ah
    .text:009AB10E     jz loc_9B4264
    .text:009AB114     jmp off_9BA137   /* .text:009BA137 off_9BA137 dd offset loc_9B2CE4 */
    .
    .text:009B2CE4     and eax, 0F0FFh
    .text:009B2CE9     cmp eax, 10ACh
    .text:009B2CEE     jz loc_9B4264
    .text:009B2CF4     jmp off_9BA3CC   /* .text:009BA3CC off_9BA3CC dd offset loc_9ABA24 */
    .
    .text:009ABA24     xor eax, eax
    .text:009ABA26     retn

This function has been split into five different instruction blocks. The blocks were moved from a contiguous memory space to different memory locations within the image of the Conficker code. For each unconditional jump instruction, we show (in comments) the memory location where the jump target is stored. Such obfuscations can pose a significant barrier for reverse engineering and decompilation tools. To solve this problem, we have developed a dechunking algorithm that systematically rewrites all such functions back to their original form. The is_private_subnet function code after deobfuscation is:

    .text:009A1311 is_private_subnet proc near     ; CODE XREF: sub_9AB1A0+13A
    .text:009A1311     mov ecx, eax
    .text:009A1313     and ecx, 0FFFFh
    .text:009A1319     cmp ecx, 0A8C0h
    .text:009A131F     jz loc_9A1340
    .text:009A1325     cmp al, 0Ah
    .text:009A1327     jz loc_9A1340
    .text:009A132D     and eax, 0F0FFh
    .text:009A1332     cmp eax, 10ACh
    .text:009A1337     jz loc_9A1340
    .text:009A133D     xor eax, eax
    .text:009A133F     retn
    .text:009A1340 ; -------------------------------------------------------
    .text:009A1340
    .text:009A1340 loc_9A1340:                     ; CODE XREF: sub_9A1311+E
    .text:009A1340                                 ; sub_9A1311+16 ...
    .text:009A1340     mov eax, 1
    .text:009A1345     retn
    .text:009A1345 is_private_subnet endp

Its decompilation (to C-like syntax) is:

    bool __usercall is_private_subnet<eax>(unsigned __int16 a1<ax>)
    {
      return a1 == 43200 || (_BYTE)a1 == 10 || (unsigned __int16)(a1 & 0xF0FF) == 4268;
    }
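For readers who want to experiment with this check, the following is a portable restatement in standard C. It is our own rewrite of the decompiled logic under the assumption that the address is held in network byte order on a little-endian x86 host, so that the first octet occupies the low byte; the helper `pack_ip` is hypothetical and exists only to build test inputs.

```c
#include <stdbool.h>
#include <stdint.h>

/* ip holds the IPv4 address with its first octet in the low byte, as a
 * network-byte-order address appears when loaded on little-endian x86. */
bool is_private_subnet(uint32_t ip)
{
    uint16_t low = (uint16_t)(ip & 0xFFFF);   /* first two octets */

    return low == 0xA8C0                 /* 192.168.0.0/16 (43200 decimal) */
        || (low & 0x00FF) == 0x0A        /* 10.0.0.0/8 */
        || (low & 0xF0FF) == 0x10AC;     /* 172.16.0.0/12 (4268 decimal) */
}

/* Hypothetical helper that packs dotted-quad octets a.b.c.d into that layout. */
uint32_t pack_ip(uint8_t a, uint8_t b, uint8_t c, uint8_t d)
{
    return (uint32_t)a | ((uint32_t)b << 8) |
           ((uint32_t)c << 16) | ((uint32_t)d << 24);
}
```

Read this way, the three constants in the disassembly correspond to the three private address ranges 192.168.0.0/16, 10.0.0.0/8, and 172.16.0.0/12.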

2.2.4 Automated Standardization of Calling Conventions

Decompilers often depend on the recognition of standard calling conventions in order to interpret the parameters and return values of functions. Such calling conventions typically involve the use of the stack or special registers to pass arguments to functions. Decompilers and disassemblers can recognize the patterns of x86 machine instructions that correspond to each calling convention, and use this insight to determine the number of arguments for each function. This represents a powerful technique for enabling dataflow analysis across functions, and is critical for reversing program semantics from binaries. In the Conficker analysis, three calling conventions are of particular interest:

- CDECL is the default calling convention used by many C systems for the x86 architecture. (Most Windows APIs use the closely related stdcall convention, in which the called function cleans up the stack.) In the CDECL convention, function parameters are pushed onto the stack in right-to-left order. Function return values are returned in the EAX register. Registers EAX, ECX, and EDX are available for use in the function.
- Fastcall indicates that the arguments should be placed in registers, rather than on the stack, whenever possible. This reduces the cost of a function call, because operations with registers are faster than with the stack. The first two function arguments that require 32 bits or less are placed into registers ECX and EDX. The rest are pushed on the stack from right to left. Arguments are popped from the stack by the called function.
- Thiscall is the default convention for calling member functions of C++ classes. Arguments are passed from right to left and placed on the stack, and the this pointer is placed in ECX. Stack cleanup is performed by the called function.

Conficker's P2P code contains an additional layer of obfuscation, in which each function has been rewritten to a user-defined calling convention, and where some of the arguments are pushed onto the stack and others are written to registers that are not associated with standard calling conventions. This obfuscation is significant, in that decompilation will fail to recognize the actual number of arguments of a function. The resulting source code derived from the decompiler incorporates fundamental misinterpretations that hinder semantic analyses. In Source Listing 1 we first provide an example of such a mangled subroutine, followed by a version of the subroutine that is restored by our system.

3. The Conficker P2P Software Architecture

The use of P2P protocols by malware is a well-established practice for such tasks as anonymous command and control, distributing spam delivery instructions, and sharing new binaries [11, 12, 8]. Conficker, too, now incorporates a P2P protocol, but employs this protocol for the rather narrow function of upgrading all peers in the peer network to the binary with the highest associated version ID.

Briefly, the P2P protocol allows each pair of connected peers to conduct a binary version comparison with one another. On the establishment of a connection, the client (connection initiator) sends the server its current binary version. If the server finds that its own binary version is greater than that of its peer, it immediately forwards its binary to the client. If the server finds that the peer has a higher version number, it asks the client to forward its binary. When both versions are equal, the connection is completed.
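The server's three-way decision can be sketched as follows. This is an illustration of the rule stated above rather than the recovered implementation; the enumeration, the function name `server_decide`, and the 32-bit width chosen for version numbers are assumptions.

```c
#include <stdint.h>

/* Hypothetical labels for the three outcomes of a version comparison. */
enum peer_action {
    SEND_BINARY,      /* server forwards its payload to the client */
    REQUEST_BINARY,   /* server asks the client to forward its payload */
    CLOSE_CONNECTION  /* versions match; nothing to exchange */
};

/* Decide what the server does after receiving the client's version. */
enum peer_action server_decide(uint32_t server_version, uint32_t client_version)
{
    if (server_version > client_version)
        return SEND_BINARY;
    if (server_version < client_version)
        return REQUEST_BINARY;
    return CLOSE_CONNECTION;
}
```

Because every pairwise encounter ends with both peers holding the higher of the two versions, repeated encounters push the whole network toward the highest version in circulation.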

In the case of binary exchange, the receiver performs a cryptographic validation of the binary similar to that which occurs via Conficker's DGA-based rendezvous point downloads [6, 7]. However, the P2P module employs a different set of cryptographic keys from that used by the DGA downloader thread. Once received via a peer, a binary is cryptographically validated, and if acceptable it may be stored or spawned as a thread, which is attached to the resident Conficker C process. Binary download management represents one of the more complex and interesting aspects of Conficker's P2P service, the details of which are discussed in Section 8. The newly acquired binary payload, when stored, becomes the current version used during the peer binary exchange procedure. In effect, both peers synchronize on the latest binary payload version, and then iteratively propagate this version to all peers (with lower versions) that they subsequently encounter.

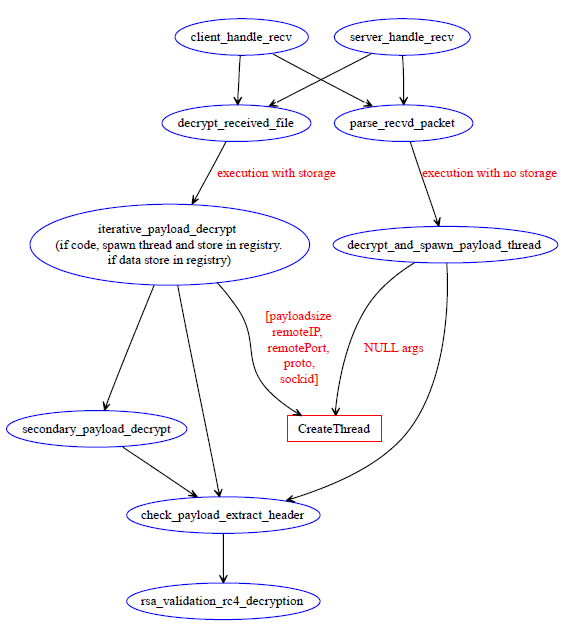

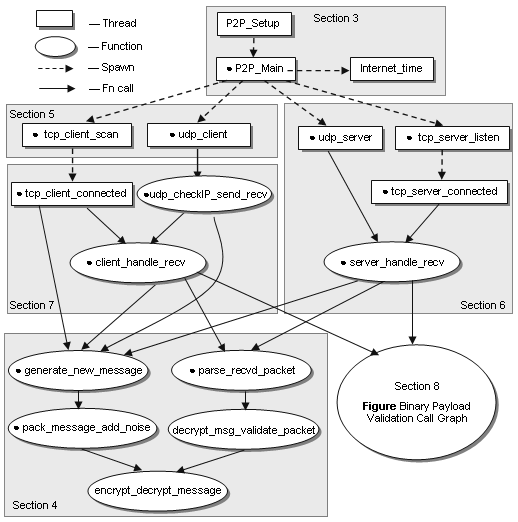

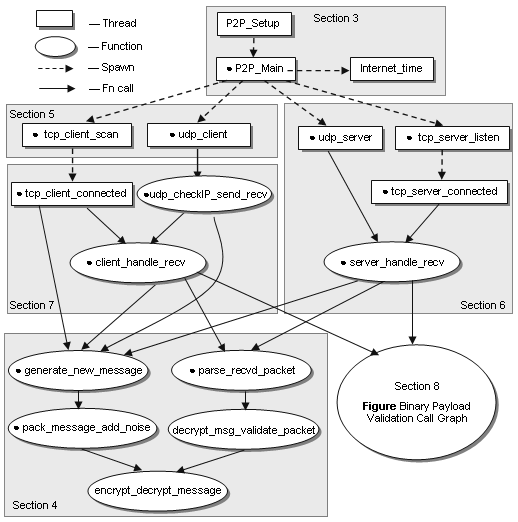

Figure 1: P2P module thread and functional call architecture

Figure 1 presents a call graph overview of the thread architecture and the major subroutines used by the Conficker P2P module. The figure is composed of rectangles, which represent the threads of the P2P module, and ovals, which represent the major subroutines called by each thread. The figure is a simplification of the software call graph. All tasks are represented, but some subroutines are not shown for readability. The dashed arrows indicate the thread spawning graph, while solid arrows represent the subroutine invocation graph. Figure 1 also indicates which sections in this document describe which threads. Finally, the dotted label in some threads and subroutines indicates which module's source code is presented in the associated section.

Initialization and establishment of the P2P service occurs in three separate locations within the Conficker binary.

3.1 Conficker Main Process Initialization Routines

While Conficker C's P2P service is largely implemented as a self-contained independent code module, a few important initialization steps are embedded in C's main initialization routines. These initialization steps include the establishment (and cleanup across boot cycles) of secondary storage locations used by the P2P service for managing temporary files. Prior to P2P service setup, Conficker initializes a directory in the Windows (OS dependent) default temporary file directory, under the name C:\...\Temp\{%08X-%04X-%04X-%04X-%08X%04X}. This directory is later used by the P2P service to store downloaded payloads, and as scratch space for storing other information. If the directory exists, this subroutine clears all 64 temporary files. It also initializes all global variables that are used to track all temporary files, record download timestamps, and track version information among downloaded files.
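The directory name template above produces a string with the same shape as a brace-enclosed GUID. The following minimal sketch shows the formatting step only; how Conficker derives the six values is not covered here, so the function name `format_scratch_name` and the input values used to exercise it are placeholders.

```c
#include <stddef.h>
#include <stdio.h>

/* Format six caller-supplied values with the template from the text.
 * Returns the number of characters written (38 for a complete name),
 * as reported by snprintf. Fields printed with %04X are masked to
 * 16 bits so that they occupy exactly four hex digits. */
int format_scratch_name(char *out, size_t out_len, const unsigned int v[6])
{
    return snprintf(out, out_len, "{%08X-%04X-%04X-%04X-%08X%04X}",
                    v[0], v[1] & 0xFFFFu, v[2] & 0xFFFFu,
                    v[3] & 0xFFFFu, v[4], v[5] & 0xFFFFu);
}
```

The same template reappears in the registry entry created by P2P_Setup, which suggests that one formatted identifier names both the scratch directory and its registry key.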

Conficker C's main initialization routines dynamically load the Microsoft cryptographic library functions, which are used by the P2P service in IP target generation, temporary file name creation, cryptographic functions, and random number selection. C also initializes the key API in-memory import table, which contains the list of obfuscated APIs used by the P2P service. This import table is effectively used to implement API call obfuscation, a common technique used to hinder process tracking and control flow analysis (as discussed in Section 2).

3.2 The P2P_Setup Thread

This thread initializes all system state necessary to manage the P2P service, and then spawns the main thread that in turn starts the P2P service. This thread initializes 64 temporary files that may be used by the P2P client and server to store parallel peer downloads. P2P_Setup conducts cryptographic validation of any binary payload stored in the P2P server directory, which may linger across process reboots. This thread performs P2P registry initialization, used for peer list management. P2P_Setup creates a registry entry that corresponds to C's scratch directory:

HKLM\Software\Microsoft\Windows\CurrentVersion\Explorer\{%08X-%04X-%04X-%04X-%08X%04X}

P2P_Setup incorporates an anti-debugging code segment that will cause debuggers to exit this process with exceptions. Finally, once all P2P service state initializations are performed, this thread spawns P2P_Main, which activates the P2P service.

3.3 P2P Main Thread and Initialization

P2P_Main is the key thread used to activate the P2P service. Its function is to interrogate the host for network interfaces and their corresponding local address ranges. It then spawns the main thread that starts the P2P service across all valid network interfaces. P2P_Main is presented in source code form in Source Listing 2.

Network Interface Selection: This involves the establishment of the interface list as shown in Source Listing 3. This subroutine is iterated per interface, and includes several checks to ensure that the IP address for the interface is legitimate and routable. The main loop (shown in Source Listing 2) executes queries (every 5 seconds) and starts server threads on as many as 32 interfaces. We refer to interfaces that are found to be legitimate and routable as validated interfaces.

Thread Management: On each validated interface, two TCP and two UDP server threads are spawned. In Figure 1, the dotted lines indicate which threads are spawned by P2P_Main. In all, P2P_Main spawns eight (or more) threads, depending on the number of interfaces in the system. These include two TCP client threads, two UDP client threads, two TCP server threads (per interface), and two UDP server threads (per interface). For both the TCP and UDP servers, one thread listens on a fixed port and another thread listens on a variable port whose value is based on the epoch week (thus the need for global Internet time synchronization among all peers, Section 3.4). If the epoch week has rolled over, then the variable TCP and UDP server threads on each interface are closed and restarted.

3.4 Peer Network Time Synchronization

One thread that plays an important role in the P2P algorithm is Internet_time, which is spawned by P2P_Main. As discussed later, peer discovery within the P2P protocol depends on epoch week synchronization among all peers. This synchronization is achieved via the probing of HTTP response headers produced from periodic connections to well-known Internet sites.

The Internet_time thread incorporates a set of embedded domain names, from which it selects a subset of entries. It performs DNS lookups of this subset, and it filters each returned IP address against the same list of blacklisted IP address ranges used by C's DGA. If the IP does not match the blacklist, C connects to the site's port 80/TCP, and sends a GET request with an empty URL. In response, the site returns a standard HTTP header that incorporates a date and time stamp, which is then parsed and used to synchronize local system time. The following web sites are consulted by C's Internet time check:

[4shared.com, adobe.com, allegro.pl, ameblo.jp, answers.com, aweber.com, badongo.com, baidu.com, bbc.co.uk, blogfa.com, clicksor.com, comcast.net, cricinfo.com, disney.go.com, ebay.co.uk, facebook.com, fastclick.com, friendster.com, imdb.com, megaporn.com, megaupload.com, miniclip.com, mininova.org, ning.com, photobucket.com, rapidshare.com, reference.com, seznam.cz, soso.com, studiverzeichnis.com, tianya.cn, torrentz.com, tribalfusion.com, tube8.com, tuenti.com, typepad.com, ucoz.ru, veoh.com, vkontakte.ru, wikimedia.org, wordpress.com, xnxx.com, yahoo.com, youtube.com]
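The header parsing step of the time check can be sketched as follows. This is a minimal illustration, assuming the site returns the common RFC 1123 form of the HTTP Date header; the structure `http_date` and the function `parse_http_date` are hypothetical names and are not taken from the Conficker binary.

```c
#include <stdio.h>
#include <string.h>

/* Broken-down fields of an HTTP Date header (all times are GMT). */
struct http_date { int day, month, year, hour, min, sec; };

/* Parse a header line such as "Date: Mon, 21 Sep 2009 12:34:56 GMT".
 * Returns 0 on success and -1 if the line is not in that form. */
int parse_http_date(const char *line, struct http_date *out)
{
    static const char months[] = "JanFebMarAprMayJunJulAugSepOctNovDec";
    char wday[4], mon[4];

    /* Seven conversions are expected: weekday, day, month name, year,
     * hour, minute, second. */
    if (sscanf(line, "Date: %3s, %d %3s %d %d:%d:%d GMT",
               wday, &out->day, mon, &out->year,
               &out->hour, &out->min, &out->sec) != 7)
        return -1;

    /* Map the three-letter month name to 1..12 by its table offset. */
    const char *p = strstr(months, mon);
    if (strlen(mon) != 3 || p == NULL || (p - months) % 3 != 0)
        return -1;
    out->month = (int)(p - months) / 3 + 1;
    return 0;
}
```

Only the date fields matter for epoch week synchronization, so a parser of this kind can ignore every other header line in the response.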

3.5 Thread Architecture

The following is a brief summary of all threads in Conficker C's P2P module, their spawning sequence, and forward references to where each is discussed.

- P2P_Setup (Section 3): initializes all system state necessary to manage the P2P service, and then spawns the main thread that starts the P2P service.
  - spawns P2P_Main (spawns 1 thread)
- P2P_Main (Section 3): interrogates the host for network interfaces and local address ranges, and then spawns the appropriate thread set to implement the P2P protocol over all valid network interfaces.
  - spawns Internet_time
  - spawns tcp_client_scan (spawns 1 local scanner thread)
  - spawns tcp_client_scan (spawns 1 global scanner thread)
  - spawns udp_client (spawns 1 local client thread)
  - spawns udp_client (spawns 1 global client thread)
  - spawns tcp_server_listen (spawns 1 thread per network interface)
  - spawns udp_server (spawns 1 thread per network interface)
- Internet_time (Section 3): synchronizes the peer to the common epoch week used for port selection during peer discovery.
- tcp_client_scan (Section 5 - Peer Discovery): conducts local and Internet-wide P2P scanning.
  - spawns tcp_client_connected (spawns 1 thread per connected peer)
- tcp_server_listen (Section 6): monitors local network interfaces on fixed and dynamically computed P2P ports. It spawns the TCP server connection thread manager when TCP packets are sent to its P2P ports.
  - spawns tcp_server_connected (spawns 1 thread per connected peer)
- udp_server (Section 6): monitors its network interface on fixed and dynamically computed P2P ports. This thread manages a UDP-based stateless server and is responsible for tracking each incoming P2P message over its assigned network interface.
- udp_client (Section 6 and Section 7): conducts local and Internet-wide P2P scanning and implements Conficker's client-side P2P protocol.
- tcp_client_connected (Section 7): called from tcp_client_scan, this thread implements Conficker's client-side P2P protocol.

4. The P2P Protocol

Our description of Conficker's P2P protocol and its message format builds on (and corroborates) prior analyses [4, 5]. In the presentation of the P2P network message structure, we reuse field names from these reports where possible to facilitate comparison. To present the P2P protocol, we first summarize the P2P message parsing logic and message structure. We then present the P2P message creation logic, and conclude by summarizing the P2P message exchange sequence that occurs between P2P clients and servers.

4.1 Parsing Received P2P Messages

Inbound message processing is handled by the parse_recvd_packet subroutine. This subroutine begins by decrypting the message and validating the checksum. The message decryption function is shown in Source Listing 4. Once the received message is successfully decrypted, it is decoded using the function build_mesg_structure. Source Listing 5 presents the source implementation of the parse_recvd_packet and build_mesg_structure subroutines.

Source

Listing 5 also presents the internal C representation of the

P2P message structure, which we refer to as

parsed_mesg.

The

parsed_mesg

structure includes the IP and port information

of the peer machine that sent the message. It provides a copy of

the

payload_version

and the

payload_offset.

The payload_data field is a complex field with a 4-byte header. The last_chunk subfield is effectively an 8-bit bit field. The first (least significant) bit indicates that this packet is the final packet (last chunk) of a multipacket message, while the second bit indicates whether the file should be immediately executed as a thread without storage (see Section 8.5.1). Finally, the size subfield corresponds to the payload data size.

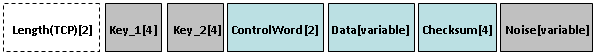

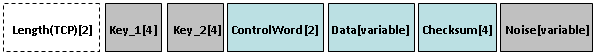

Figure

2: Conficker P2P protocol header

The network representation of a P2P parsed_mesg structure is illustrated in Figure 2. In this figure, the field lengths shown in brackets are in bytes. Dotted fields are present only in TCP packets, and fields in blue are encrypted using the key data. In this network representation, the header begins with an optional 2-byte length field, which is present only in TCP packets. The remainder of the header is identical for both TCP and UDP. An 8-byte key data field is used to encrypt the remainder of the message. Finally, a random-length noise segment is appended to the end of the message, presumably to thwart length-based packet signatures.

The first byte of the payload_data

field indicates whether this packet is the last

data packet. The last two bytes indicate the length of the

following payload data chunk. Similarly, the peers data table is

a complex field with a 2-byte header that indicates the number of

entries. Each entry is 12 bytes in length, with a 4-byte IP

address and 8-byte bot ID field.
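The two complex fields described above can be sketched as a small decoder. This is a minimal illustration, not the decompiled Conficker code: the names `payload_hdr_view`, `decode_payload_hdr`, and `peer_table_length` are hypothetical, the meaning of the second header byte is left open because the text does not describe it, and the little-endian byte order of the 2-byte size is an assumption.

```c
#include <stddef.h>
#include <stdint.h>

#define CHUNK_FLAG_LAST    0x01u /* bit 0: final chunk of a multipacket message */
#define CHUNK_FLAG_RUN_NOW 0x02u /* bit 1: execute as a thread without storage  */
#define PAYLOAD_HDR_LEN    4u    /* 4-byte payload_data header                  */
#define PEER_COUNT_LEN     2u    /* 2-byte entry count before the peer table    */
#define PEER_ENTRY_LEN     12u   /* 4-byte IP address + 8-byte bot ID           */

/* Hypothetical decoded view of the payload_data header. */
typedef struct {
    int      is_last_chunk;
    int      run_without_storage;
    uint16_t size;               /* length of the chunk that follows */
} payload_hdr_view;

/* Decode the 4-byte header at buf. Returns 0 on success, -1 if the buffer
 * is too short or the declared chunk size exceeds the remaining bytes.
 * Assumption: the size in bytes 2..3 is little-endian. */
int decode_payload_hdr(const uint8_t *buf, size_t len, payload_hdr_view *out)
{
    if (buf == NULL || out == NULL || len < PAYLOAD_HDR_LEN)
        return -1;
    out->is_last_chunk       = (buf[0] & CHUNK_FLAG_LAST) != 0;
    out->run_without_storage = (buf[0] & CHUNK_FLAG_RUN_NOW) != 0;
    out->size = (uint16_t)(buf[2] | (buf[3] << 8));
    if ((size_t)out->size > len - PAYLOAD_HDR_LEN)
        return -1;
    return 0;
}

/* Total on-wire length of a peers data table with entry_count entries. */
size_t peer_table_length(uint16_t entry_count)
{
    return PEER_COUNT_LEN + (size_t)entry_count * PEER_ENTRY_LEN;
}
```

Under these assumptions, a peer table with three entries occupies 2 + 3 × 12 = 38 bytes.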

Included in the payload data header

is a 2-byte packet

control

word. The bit fields of the decrypted

control word describe which fields are present in the P2P data section.

Table 1 summarizes the data fields corresponding to each bit in the control word and their corresponding lengths. The length of the message can thus be inferred by parsing the control word, except for the variable-length fields of payload data and peers data.

| Bit | Name | Description | Length (Bytes) |
|---|---|---|---|
| 0 | Role | Client=1/Server=0 | n/a |
| 1 | Local | Is peer in the same subnet? | n/a |
| 2 | Proto | TCP=1/UDP=0 | n/a |
| 3 | Location | External IP address and port | 6 |
| 4 | Payload Version | Version of running payload | 4 |
| 5 | Payload Offset | Offset of current payload chunk | 4 |
| 6 | Payload Data | Payload chunk data | variable |
| 7 | Data_X0 | System summary information? | 26 |
| 8 | Peer Data | Presence of a table of peer data | variable |

Table 1: P2P control word bit field description
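To make the role of the control word concrete, the following sketch computes the length contributed by the fixed-size fields of Table 1. The constant and function names are hypothetical, and the sketch assumes that bit 0 of the table is the least significant bit of the 16-bit control word.

```c
#include <stddef.h>
#include <stdint.h>

/* Control word bits, numbered as in Table 1 (bit 0 assumed to be the LSB). */
enum {
    CW_ROLE_CLIENT  = 1 << 0, /* Client=1/Server=0             */
    CW_LOCAL        = 1 << 1, /* peer is in the same subnet    */
    CW_PROTO_TCP    = 1 << 2, /* TCP=1/UDP=0                   */
    CW_LOCATION     = 1 << 3, /* external IP and port, 6 bytes */
    CW_PAYLOAD_VER  = 1 << 4, /* payload version, 4 bytes      */
    CW_PAYLOAD_OFF  = 1 << 5, /* payload offset, 4 bytes       */
    CW_PAYLOAD_DATA = 1 << 6, /* payload chunk, variable       */
    CW_DATA_X0      = 1 << 7, /* Data_X0, 26 bytes             */
    CW_PEER_DATA    = 1 << 8  /* peer table, variable          */
};

/* Sum of the fixed-length fields announced by the control word. */
size_t cw_fixed_fields_length(uint16_t cw)
{
    size_t n = 0;
    if (cw & CW_LOCATION)    n += 6;
    if (cw & CW_PAYLOAD_VER) n += 4;
    if (cw & CW_PAYLOAD_OFF) n += 4;
    if (cw & CW_DATA_X0)     n += 26;
    return n;
}

/* Nonzero when the message also carries fields whose length must be read
 * from their own headers (payload data or peers data). */
int cw_has_variable_fields(uint16_t cw)
{
    return (cw & (CW_PAYLOAD_DATA | CW_PEER_DATA)) != 0;
}
```

A receiver following this approach would first add up the fixed fields, then consult the payload data and peers data headers only when the corresponding bits are set.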

4.2 Generating P2P Messages

Source

Listing 6 presents the implementation of the

generate_new_message

subroutine. This function allows clients and servers to produce

new P2P messages. The function begins by computing the P2P

message control word. It fills in the external IP field, the port

field, the payload version field, and the payload offset field.

Finally, it calls pack_message, which employs encrypt_decrypt_message to encrypt the payload data of the message prior to transmission. It also calls pack_message_add_noise to construct the full message header and payload, and to append a variable-length random byte segment to the final message.

4.3 The P2P Message Exchange

Sequence

Each Conficker C host is capable of

operating as both client (P2P session initiator) and server.

Details of the server thread implementation are discussed in

Section 6, and the client thread

implementation is discussed in

Section 7.

Here, we discuss the two-way message exchange sequence that occurs

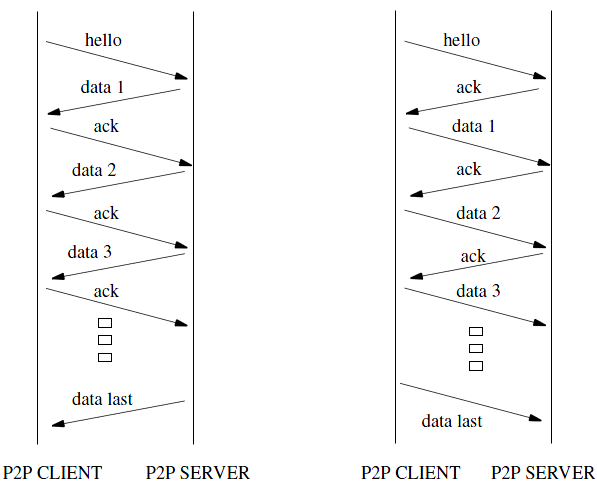

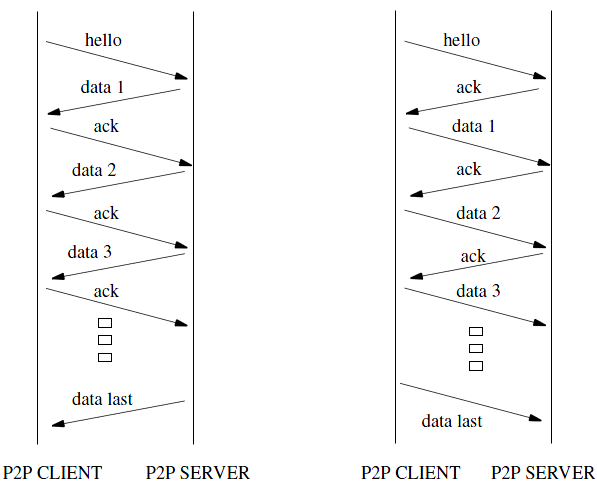

between client and server. To do this, we illustrate the timeline

diagram of a binary update scenario in

Figure 3.

The left panel illustrates a message flow when the P2P binary version

of the server is greater than the client's version. The

right panel illustates a message flow when the P2P binary version of

the client is greater than the server's version.

Figure 3: Conficker P2P binary update timeline diagram

In our example, the remote P2P server has a more recent binary (higher

version ID) than the local P2P client (the connection initiator).

The client begins this scenario by sending a hello message with its

current binary payload version ID. The other payload fields (payload_offset,

payload_length, last_chunk) are all set to 0 in this hello

message.

Next, the server determines that its

payload version is greater than the client's version, and in response

immediately begins transfer of its current high-version binary.

The server responds with its first encrypted payload data

segment. In this protocol, messages may be sent over

multiple packets, with the last_chunk

field set to 0 to indicate that the current packet does not contain the

end of the message.

The client acknowledges receipt of the server's packet by replying with

an ack message. This ack message triggers the release of the next

payload segment from the server. This exchange continues until

the last data segment (a packet with the payload_last_data field

set to 1) is sent from the server. The binary is then

cryptographically validated and executed by the client, and if

successful its binary version ID is updated.

In the second case, where the

client's version is more recent, the server replies to the client's

hello with an ack packet that is devoid of payload data. The

client checks the version number on the ack packet. Finding that

its version number is greater than the server, the client immediately

initiates the uploading of its more recent binary version to the

server. The server replies to these packets with acknowledgments until

the last data packet is transmitted. The payload is then

validated and executed at the server.
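The version comparison that drives both panels of the exchange can be summarized in a few lines. This is a minimal sketch under stated assumptions: the names `sync_action`, `choose_sync_action`, and `is_final_chunk` are hypothetical, versions are treated as unsigned 32-bit values (Table 1 gives only the 4-byte width), and the last-chunk test assumes the sender knows the total payload length.

```c
#include <stdint.h>

/* What a peer does after comparing its payload version with the remote one. */
typedef enum {
    PEER_IN_SYNC,       /* same version: nothing to transfer              */
    PEER_SEND_PAYLOAD,  /* local version is higher: push the local binary */
    PEER_AWAIT_PAYLOAD  /* remote version is higher: receive its binary   */
} sync_action;

sync_action choose_sync_action(uint32_t local_version, uint32_t remote_version)
{
    if (local_version > remote_version)
        return PEER_SEND_PAYLOAD;
    if (local_version < remote_version)
        return PEER_AWAIT_PAYLOAD;
    return PEER_IN_SYNC;
}

/* Nonzero when the chunk starting at offset and spanning chunk_len bytes
 * reaches the end of a payload of total_len bytes, i.e. when the sender
 * would mark the packet as the last chunk. */
int is_final_chunk(uint32_t offset, uint32_t chunk_len, uint32_t total_len)
{
    return (uint64_t)offset + chunk_len >= total_len;
}
```

The same decision applies on both sides: in the left panel the server reaches `PEER_SEND_PAYLOAD`, while in the right panel it is the client that does.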

5. Peer Discovery Protocol

Conficker's P2P module does not incorporate a seed list of peers to help each drone establish itself in the peer network, as has been a well-established practice with other malware ([8, 11, 12] provide several examples). Rather, Conficker hosts must bootstrap themselves into the network through Internet-wide address scanning, and continue this scanning throughout the life of the process. Once a C drone locates or is located by other Conficker peers, the P2P module allows it to act as client or server, respectively.

Peer discovery occurs over both the TCP and UDP protocols. The thread responsible for TCP peer scanning is tcp_client_scan, shown in Source Listing 7, which is spawned from P2P_Main. Two copies of this thread are created: one for local scanning and one for non-local (i.e., Internet) scanning. The local tcp_client_scan thread defines local target IP addresses as those within the range of the current network interface's subnet netmask. This pair of local and global tcp_client_scan threads services all valid network interfaces (Section 3) within the host.

UDP scans are performed directly by

the

udp_client

thread. Details of the Conficker P2P Client logic are presented in

Section 7. Similar to the TCP thread

logic, two

udp_client

threads are spawned to handle both local

and global network scanning. However, a local and global instance

of this thread is spawned per valid network interface (i.e., if an

infected host has both a valid wired and wireless network interface,

then two pairs of the local/global udp_client threads are created).

Peer discovery involves three phases: target IP generation, network scanning, and (in the case of TCP) spawning the tcp_client_connected thread when a network peer acknowledges the scan request. Peer list creation and management is handled within the TCP and UDP client logic, and is therefore discussed in Section 7.

Target IP generation for both TCP and UDP is handled by the subroutine build_scan_target_ip_list (Source Listing 8). This routine relies on the arrays of random numbers produced during the initialization phase of the P2P service (Section 3). A global counter ensures that a different list is produced each time the function is invoked.

Each time build_scan_target_ip_list is called, it computes an array of 100 random IP addresses. When called by the local scan thread, it produces 100 IP addresses within the range of the network interface subnet mask. When called by the global scan thread, it produces 100 IP addresses based on the following criteria. If the number of peers in its peerlist is non-zero, then with a small probability, i.e., when (random() % (1000 - 950 * num_peers / 2048) == 0), it adds one of the existing peers to the target list. Otherwise, it pseudo-randomly produces an IP that passes the following constraints:

- - The IP address is legitimate (not a broadcast or DHCP failure

address).

- - The IP address is not a private subnet address (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).

- - The IP address is not found within Conficker C's filtered address range (check_IP_is_in_ranges).
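The three constraints above can be sketched as a single predicate over a host-byte-order IPv4 address. This is an illustration only: the helper names are hypothetical, the "DHCP failure address" is assumed to mean the 169.254.0.0/16 link-local range, only the limited broadcast address 255.255.255.255 is treated as broadcast, and Conficker's own range filter (check_IP_is_in_ranges) is represented by an optional callback rather than reproduced.

```c
#include <stddef.h>
#include <stdint.h>

/* Build a host-byte-order IPv4 address from its four octets. */
#define IPV4(a, b, c, d) \
    (((uint32_t)(a) << 24) | ((uint32_t)(b) << 16) | ((uint32_t)(c) << 8) | (uint32_t)(d))

/* Nonzero when ip falls inside net/bits. */
int ip_in_prefix(uint32_t ip, uint32_t net, unsigned bits)
{
    uint32_t mask;
    if (bits > 32)
        bits = 32;
    mask = (bits == 0) ? 0u : (uint32_t)(0xFFFFFFFFu << (32 - bits));
    return (ip & mask) == (net & mask);
}

/* Nonzero for the three private ranges named in the text. */
int ip_is_private(uint32_t ip)
{
    return ip_in_prefix(ip, IPV4(10, 0, 0, 0), 8)
        || ip_in_prefix(ip, IPV4(172, 16, 0, 0), 12)
        || ip_in_prefix(ip, IPV4(192, 168, 0, 0), 16);
}

/* Nonzero when ip passes all three constraints. in_filtered_ranges stands
 * in for Conficker's filtered-range check and may be NULL to skip it. */
int ip_is_scan_candidate(uint32_t ip, int (*in_filtered_ranges)(uint32_t))
{
    if (ip == 0xFFFFFFFFu)
        return 0;                                    /* limited broadcast */
    if (ip_in_prefix(ip, IPV4(169, 254, 0, 0), 16))
        return 0;                                    /* assumed DHCP failure range */
    if (ip_is_private(ip))
        return 0;
    if (in_filtered_ranges != NULL && in_filtered_ranges(ip))
        return 0;
    return 1;
}
```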

Even with a full peerlist of 2048 peers, the probability of adding an existing peer is just 1/50 per location, so we would expect two of the existing peers to appear in the list of 100 IPs. When the peerlist contains just one peer, the probability of picking that peer is 1/1000 per location, or approximately 1/10 per list. The exact value follows from the binomial distribution:

$$\Pr(\text{peer is chosen}) = 1 - \Pr(\text{none of the 100 picks is the peer}) = 1 - \left(\frac{999}{1000}\right)^{100} \approx 0.0952$$
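The figures quoted above follow directly from the integer arithmetic in the selection test. The sketch below reproduces them; the function names are hypothetical, and the divisor expression is copied from the condition given in the text, evaluated with C integer division.

```c
/* Divisor used in the test random() % divisor == 0, as given in the text.
 * Integer division: 1000 with 1 peer, 50 with a full list of 2048. */
unsigned peer_pick_modulus(unsigned num_peers)
{
    return 1000u - 950u * num_peers / 2048u;
}

/* Probability that at least one of list_len target slots is filled from
 * the existing peerlist, treating every slot as an independent trial
 * that succeeds with probability 1/modulus. */
double prob_any_peer_slot(unsigned num_peers, unsigned list_len)
{
    double miss = 1.0 - 1.0 / (double)peer_pick_modulus(num_peers);
    double none = 1.0;
    unsigned i;
    for (i = 0; i < list_len; i++)
        none *= miss;
    return 1.0 - none;
}
```

With one known peer and a 100-entry list, `prob_any_peer_slot(1, 100)` evaluates to roughly 0.0952, matching the value above.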

Once the list of 100 IP targets is computed, the TCP and UDP scanners enter the scan phase. For each target IP address, the scanner must first compute an IP-dependent fixed port, and a variable port that is dependent on both the target IP and the current epoch week. Implementation details of the Conficker C port generation algorithm are available at [9]. Each fixed and variable port computed is vetted against a port blacklist. An analysis of the port blacklist check is available at [10].

The lists of targeted IPs computed by TCP and UDP are independent and not meant to overlap. Each IP targeted by the TCP client scan thread or UDP client scan thread receives a probe on either the fixed or the variable port for that destination IP and protocol. The choice between fixed and variable port is random. The TCP and UDP scanning logic for both local and global address ranges loops continuously from the target IP generation phase, which builds the list of 100 candidates, to the scan phase, which probes each targeted IP address over its computed fixed or variable port. On each iteration, the udp_client scanner pauses for 5000 ms and then recomputes the next set of 100 candidate IPs. The tcp_client_scan thread, shown in Source Listing 7, includes no such pause logic. When a peer is discovered, the tcp_client_scan thread spawns the tcp_client_connected thread to establish a peer session, while the udp_client thread handles client communications in the same thread.

When contacted by another peer over UDP or TCP, Conficker's P2P service can operate in server mode, via two P2P server threads: tcp_server_listen and udp_server. A UDP and a TCP server thread are spawned for each valid network interface found on the host. These server threads listen for all incoming UDP and TCP connections on two listen ports. One server listen port is statically computed based on the P2P IP address assigned to the server thread's network interface. The other port is dynamically computed based on the IP address and the current epoch week. The C implementation of this static and dynamic port computation is presented in [9].

Both UDP and TCP server threads operate similarly. The primary differences between these threads derive from the stateless versus stateful nature of the protocols over which they operate. When in server mode, an infected C drone interacts with the Conficker P2P network and can distribute digitally signed files to, or receive them from, other P2P clients. It can operate as a binary payload distributor when it has determined that it has a locally stored binary in its P2P temporary directory, and that this file has been properly cryptographically signed by the Conficker authors and is unaltered (i.e., properly hashed and signed). It initiates a binary distribution to its client if it determines that its current binary payload has a higher version ID than that reported by the client. It can also ask for the client's local binary payload when it determines that the client is operating with a binary version that is greater than its own.

The top-level udp_server thread function, illustrated in Source Listing 9, is straightforward. It begins by binding to the

appropriate local network interface and port pairs, based on the

argument that is passed to it by

P2P_Main.

The subsequent loop waits until a packet is received on this interface

and port, and then invokes the

server_handle_recv

function, which it shares with its TCP counterpart. This function

parses the packet, constructs the P2P message, and returns a reply

message to its client. If the reply message length is non-zero,

the reply message is sent back to the remote client, and the loop

continues waiting for the next message.

Unlike the UDP server thread, a top-level TCP connection handler called tcp_server_listen simply listens for client connection attempts (Source Listing 10). When a

connection is accepted, a new TCP thread is spawned to handle this new

client. The

tcp_server_connected

thread, shown in

Source

Listing 11, uses the recv system call to wait for messages from the

remote client. Messages received over this port are first checked

to ensure that they conform to the Conficker TCP P2P protocol format

(i.e., the value of the first two bytes corresponds to the length of

message - 2). Once the message is received, it is passed to the

server_handle_recv

function, as in the UDP server case. If the

output_message

returned by the

server_handle_recv

function is of non-zero length, a response is sent back to the remote

client. The outer loop contains a packet counter to ensure that

no more than 2000 TCP packets are exchanged per connection.
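The TCP framing check and the per-connection packet cap described above can be sketched as follows. The function names are hypothetical, and the little-endian byte order of the 2-byte length prefix is an assumption; the text states only that the prefix equals the message length minus 2.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_PACKETS_PER_CONNECTION 2000u

/* Nonzero when the 2-byte prefix equals the number of bytes that follow
 * it, i.e. the total received length minus 2.
 * Assumption: the prefix is stored in little-endian order. */
int tcp_frame_is_well_formed(const uint8_t *buf, size_t len)
{
    size_t declared;
    if (buf == NULL || len < 2)
        return 0;
    declared = (size_t)buf[0] | ((size_t)buf[1] << 8);
    return declared == len - 2;
}

/* Nonzero while the connection may still exchange another packet. */
int tcp_may_exchange_more(unsigned packets_exchanged)
{
    return packets_exchanged < MAX_PACKETS_PER_CONNECTION;
}
```

A server loop built on this sketch would drop the connection as soon as either check fails, before handing anything to server_handle_recv.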

Both TCP and UDP servers parse inbound messages using the common subroutine, server_handle_recv. This function is illustrated in Source Listing 12. It begins by parsing the received message using the parse_recvd_packet function, which validates the message content and populates the P2P message structure (structure pm in the source

listing). It then compares the version number of the software

running locally against the version reportedly possessed by the remote

client. If the local version is more recent (numerically higher) than

the remote client, it then starts sending chunks of the file to the

remote client. The chunk size is between 1024 and 0xC01 + 1024 or

between 512 and 1024, depending on whether the protocol is TCP or UDP,

respectively. The function

generate_new_message

is called, which packs a new message to the client based on this

data. If the remote payload version is more recent and no

payload_data is being sent, a reply message is sent to the remote

client implicitly requesting the payload. If payload data is

being sent and the remote version is more recent, the data is written

to a file.
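The chunk size ranges quoted above are consistent with a base size plus a bounded random offset. The sketch below shows one way such a selection could be written; it is an assumption about the form of the computation, not a transcription of the source listing. In particular, the `base + r % span` shape and the UDP span of 0x201 are guesses chosen to match the stated ranges, and `pick_chunk_size` is a hypothetical name.

```c
#include <stdint.h>

/* Pick a chunk size from a caller-supplied random value r.
 * TCP: 1024 + (r % 0xC01) yields 1024..4096, staying below 0xC01 + 1024.
 * UDP: 512 + (r % 0x201) yields 512..1024 (span assumed, see lead-in). */
uint32_t pick_chunk_size(int is_tcp, uint32_t r)
{
    if (is_tcp)
        return 1024u + r % 0xC01u;
    return 512u + r % 0x201u;
}
```

Under this reading, the TCP upper bound of 0xC01 + 1024 (4097) is exclusive, which agrees with a largest TCP chunk of 4096 bytes.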

Conficker's P2P client logic is

invoked once the peer discovery procedure produces a candidate

server. As discussed in

Section 5, peer

connections are established as a result of networking probes or by

revisiting servers whose addresses were previously saved within

the client's peerlist table.

In the case of the TCP client, each

newly established peer connection spawns the

tcp_client_connected

thread, presented in

Source

Listing 13. This thread is responsible for generating a new

ping message to the remote server. The ping message does not have

any payload data, and its purpose is to inform the server of the

client's current binary payload version. The server must then

decide the appropriate client response: 1) send the client its binary

payload, 2) request that the client forward its own binary

payload, or 3) confirm that both peers are synchronized on the

same payload.

Regardless of which decision the

server makes, the client prepares for this peer exchange by entering a

loop in which it will alternate from sending to receiving P2P messages,

until one of the following conditions is reached:

- - client_handle_recv

returns an empty buffer (result_out =

NULL).

- - WaitForSingleObject (a2) returns true.

- - call_recv

returns an error or empty message.

- - call_select_and_send

returns an error.

- - The number of messages sent exceeds 2000.

Each message received by the client is first handled by the call_recv function. This function waits for an incoming message on the socket, and then validates whether the received message conforms to the Conficker TCP protocol (i.e., the value of the first two bytes corresponds to the remaining length of the message). This message is then passed to the client_handle_recv function, which parses the message and composes an appropriate response.

In the case of UDP, the client scan

routine initiates communication by sending the first hello packet and

receiving the response. It then calls the

udp_checkIP_send_recv

function shown in

Source Listing 14.

The arguments to this function include the socket, a complex type, and a flag indicating whether the communicating peer is local or remote. The 40-byte second argument contains two sockaddrs, a

pointer to a buffer, and the buffer length. It begins by checking

if the peer address is a broadcast address or in one of the filtered

addresses. If the conditions are false, then it sends the buffer

to the peer (retrying as many as three times if necessary). It

then enters a finite loop of as many as 2000 messages where it receives

messages, parses them by calling

client_handle_recv

and sends replies using the

call_select_and_sendto

subroutine. If the send fails, it retries three times.

The

client_handle_recv

subroutine is shown in

Source Listing 15.

In many ways, this is similar to the server_handle_recv function. It also begins by parsing the received message using the parse_recvd_packet function, which validates the message contents and populates the parsed_mesg structure (i.e., structure pm in the source listing).

Like the server, the client is responsible for handling the three possible outcomes that may arise when the binary payload version comparison is performed with the server:

- - server payload version == client payload version: the server's IP address is added to the client's local peerlist table, and the function exits. Peerlist updating happens only from the client handler. Peers do not update their peerlists when operating in server mode.

- - server payload version < client payload version: the client begins sending chunks of its local binary payload to the server. The chunk size is 1024 to 4096 or 512 to 1024, depending on whether the protocol is TCP or UDP, respectively. The function generate_new_message is utilized to pack each new message to the server.

- - server payload version > client payload version: the client recognizes that the server has immediately initiated a binary payload upload. The client enters a receive loop and reconstructs the server's payload message. Once downloaded, the client cryptographically validates the binary payload, and proceeds to spawn this binary code segment, store the payload, and update its local version information.

Section 8 explains how received

binaries are stored and managed.

As we have explored the P2P client and server logic in the previous sections, the lack of a rich peer communication scheme becomes apparent. Peer messages are narrow in functional scope, simply allowing a pair of peers to synchronize their hosts to the binary payload with the highest version ID.

However, while the protocol itself appears simple, further analysis

suggests that the Conficker authors have pushed most of the complexity

of their peer network into the logic that manages the digitally signed

payload.

There are important differences

between the binary distribution capabilities of Conficker's original

DGA Internet rendezvous scheme and this newly introduced peer

network. Here we highlight three of the more obvious areas

where these two binary distribution services differ:

1. Files downloaded through the rendezvous points are in the Microsoft PE executable format, and are destined for execution as processes that reside independently from the running Conficker process. In contrast, P2P downloaded binaries represent code segments that are destined to run as threads within the resident Conficker's virtual memory space. This significant difference provides the Conficker authors with great flexibility in managing and even reprogramming elements of their bot infrastructure.

2. A file downloaded from the P2P mechanism contains a header that embeds critical metadata regarding the payload, such as its version, its expiration date, and whether the payload has been encrypted multiple times. A downloaded file also embeds extra bytes that can be any data associated with the payload code. It can consist entirely of data bits, which are not meant to be executed, but rather stored on the infected node and further distributed through the P2P protocol.

3. The files distributed through the P2P mechanism and the DGA mechanism are both digitally signed, using different 4096-bit RSA keys and the MD6 hashing function.

The key subroutine to examine for

understanding how files are locally handled and then shared across the

P2P network is

rsa_validation_rc4_decryption.

This subroutine checks the signature of the digitally signed

payload, and takes the following arguments: the public exponent,

the modulus, the downloaded payload, its size and the appended

signature. The call graph in

Figure 4

describes how this function gets called from the set of P2P threads.

Figure

4: Binary payload validation call graph

Two primary paths are possible from

the client and server threads that process incoming messages from

peers. In one case, a single message is interpreted as an encrypted

code segment, which is spawned directly as a thread within the

Conficker process image. In the second case, the payload is stored in a file and checked to determine whether it has been encrypted multiple times. The payload is further checked to determine whether it is code that needs to be spawned as a thread, or data that needs to be stored in its encrypted form in the registry and further distributed within the peer network.

To understand how files are

downloaded and validated by the various threads of the P2P service, let

us first describe several key data structures that the P2P file

management subroutines manipulate:

- - A registry entry provides a persistent store for

capturing the contents of a downloaded payload

- - A separate registry entry stores the list of peers found to

possess the same binary version as the client

- - A global array of 64 elements allows Conficker's P2P service to simultaneously download as many as 64 files

- - An array of IP addresses is used to identify scan targets

The non-persistent stores, in the form of arrays of file downloads and peer IPs, are used as temporary data structures to keep track of the simultaneous download of multiple files, depending on how many peers have a binary ready to share. The two persistent stores are used to store a list of peers and a digitally signed file.

The following structure is associated

with each downloaded payload from a remote peer. Conficker

maintains an array of 64 elements that support the potential of as many

as 64 simultaneous payload downloads. Once a download is achieved, the

information stored in this structure is passed to a subroutine that

decrypts the payload and eventually stores it in the registry, and then

spawns the decrypted payload code as a thread inside the Conficker

running process:

    typedef struct p2p_file_storage {
        int32 is_populated;
        int32 chunks;
        int32 local_port;
        int32 peer_IP;
        int32 peer_port;
        int32 local_IP;
        int32 version;
        int32 protocol;
        int32 time_creation;
        int32 last_time_written;
        int32 FileHandle;
        int32 nb_writtenbytes;
    } p2p_file_storage;
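As an illustration of how a fixed table of 64 such records might be managed, the sketch below claims a slot for an incoming download. It is not taken from the decompiled code: the reduced `download_slot` type, the `claim_download_slot` name, and the choice to key a download on the peer's IP and port are all assumptions made for the example.

```c
#include <stdint.h>

#define MAX_DOWNLOADS 64

/* Reduced, hypothetical view of one download record. */
typedef struct download_slot {
    int32_t is_populated;
    int32_t peer_IP;
    int32_t peer_port;
    int32_t version;
} download_slot;

/* Return the slot already tracking this peer, or claim the first free
 * slot for it. Returns -1 when all 64 slots are in use. */
int claim_download_slot(download_slot table[MAX_DOWNLOADS],
                        int32_t peer_ip, int32_t peer_port, int32_t version)
{
    int free_idx = -1;
    int i;
    for (i = 0; i < MAX_DOWNLOADS; i++) {
        if (table[i].is_populated) {
            if (table[i].peer_IP == peer_ip && table[i].peer_port == peer_port)
                return i;            /* download already in progress */
        } else if (free_idx < 0) {
            free_idx = i;            /* remember the first free slot */
        }
    }
    if (free_idx >= 0) {
        table[free_idx].is_populated = 1;
        table[free_idx].peer_IP = peer_ip;
        table[free_idx].peer_port = peer_port;
        table[free_idx].version = version;
    }
    return free_idx;
}
```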

Below, we describe how both the

client and server threads manipulate the payload data received as a

result of the message exchange described in

Section

4, beginning with how the function

rsa_validation_rc4_decryption

is invoked.

Payloads exchanged through the P2P protocol are always subjected to an

RSA signature validation check and then decrypted using an RC4

decryption routine. Upon receiving the last packet from a peer, a

Conficker node creates a dedicated data structure we call

payload_check:

    typedef struct payload_check {
        int32 size;
        int32 decrypted_header[16];
        int32 payload_encrypted;
        int32 payload_decrypted;
    } payload_check;

The data structure size is 76 bytes. The first four bytes contain the size of the received payload. The last 8 bytes contain pointers to two newly allocated heap spaces, which hold the received encrypted and digitally signed payload and its corresponding decrypted payload, respectively. Upon a successful digital signature check and decryption, the first 64 bytes of the decrypted payload content are copied to the decrypted_header field of the payload_check structure.
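The 76-byte figure can be checked against the field list: 4 bytes of size, 64 bytes of header, and two 4-byte pointers. The sketch below restates the layout with fixed-width types so that the arithmetic holds on any build host; the type name `payload_check32` is hypothetical, and the two pointer fields are stored as 32-bit values because Conficker C runs as a 32-bit Windows process.

```c
#include <stddef.h>
#include <stdint.h>

/* Fixed-width restatement of payload_check for a 32-bit target. */
typedef struct payload_check32 {
    uint32_t size;                 /* size of the received payload          */
    uint32_t decrypted_header[16]; /* first 64 bytes of decrypted payload   */
    uint32_t payload_encrypted;    /* 32-bit pointer to the encrypted copy  */
    uint32_t payload_decrypted;    /* 32-bit pointer to the decrypted copy  */
} payload_check32;

/* Compile-time check: the array size is negative if the layout is not 76 bytes. */
typedef char payload_check32_is_76_bytes[(sizeof(payload_check32) == 76) ? 1 : -1];
```

The header therefore starts at offset 4, and the two pointers occupy offsets 68 and 72.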

Upon examining the extracted header from the decrypted payload, further

actions are undertaken. Payload validation is wrapped within a

function that also extracts the P2P message header, called

check_payload_extract_header.

The source listing of this subroutine is shown in

Source Listing 16.

After decrypting the payload, the

function

check_payload_extract_header

populates the decrypted_header field of the payload_check structure by copying the first 64 bytes of the decrypted payload. Based

on the analysis of the extracted header, further decryption of the

payload might occur. This is the case where the original payload

has been encrypted multiple times. Subroutine

secondary_payload_decrypt,

shown in

Source Listing

17, performs one further layer of decryption.

This function first clears the

initial encrypted payload stored in the payload_check structure,

and copies the decrypted payload minus the 64-byte header to the

encrypted field of the same payload_check

structure.

8.3 Payload Header Parsing

The payload header is a 64-byte

structure used to determine the embedded version of the payload to

check if it is compatible with the version sent as part of the P2P

message exchange. It includes an expiration date, which

enables the P2P service to disregard an obsolete version of the

distributed payload. The structure is described as follows:

- - The first double word represents an embedded version number.

- - The second double word represents an expiration date.

- - The third double word is used as a control word to indicate if the payload should be executed or just stored as data in the registry.

- - The fourth double word is used as a control word and has the following values:

* 4: indicates the presence of multiple layers of encryption and therefore requires multiple calls to the decryption routine

* 2: indicates that no further decryption is necessary

* 1: aborts decryption

- - The remaining 48 bytes are unused.
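The header layout can be sketched as a C structure. The field and function names below are hypothetical; the sketch assumes that the four double words occupy the first 16 bytes with the 48 unused bytes following them, and that any control value other than 4 or 2 is handled like an abort.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view of the 64-byte payload header. */
typedef struct payload_header {
    uint32_t version;         /* first double word: embedded version       */
    uint32_t expiration;      /* second double word: expiration date       */
    uint32_t exec_or_store;   /* third double word: execute or store only  */
    uint32_t decrypt_control; /* fourth double word: 4, 2, or 1            */
    uint8_t  unused[48];      /* remaining bytes                           */
} payload_header;

typedef enum {
    DECRYPT_ABORT    = 1, /* stop processing the payload        */
    DECRYPT_DONE     = 2, /* no further decryption is necessary */
    DECRYPT_CONTINUE = 4  /* another encryption layer remains   */
} decrypt_step;

/* Map the fourth double word to the next action. */
decrypt_step next_decrypt_step(uint32_t decrypt_control)
{
    switch (decrypt_control) {
    case 4:  return DECRYPT_CONTINUE;
    case 2:  return DECRYPT_DONE;
    default: return DECRYPT_ABORT; /* 1, or (assumed) any unknown value */
    }
}
```

A caller would loop on the decryption routine while `next_decrypt_step` returns `DECRYPT_CONTINUE`, re-reading the header after each layer is removed.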

8.4 Payload Execution

The decrypted valid payload received

from a peer is executed as a thread in the running Conficker C process

via subroutine

spawn_payload_thread.

The source implementation of this thread is shown in

Source Listing 18.

The function creates a thread that

executes the decrypted code and passes to the thread information about

a temporary heap-allocated memory space, the size of the code, the IP

from which it received the payload and the port through which it

received the payload, and the protocol used to deliver the payload. It

also sends a pointer to the array of obfuscated APIs initialized in the

setup phase of the P2P protocol and a pointer to the GetProcAddress

that can be used to load additional APIs.

8.5 Payload Execution and Storage

The Conficker P2P service considers two types of executable payload code, which differ in two notable ways. First, payloads of the first type are not stored in the registry. Because they are sent in a single message, they can be interpreted as channels for command and control. Second, the two types differ in the parameters passed to their threads. Payloads of the first type have access to the remote peer IP and port when running, and therefore can further authenticate their origin at runtime. They are never discarded by the receiving node unless their version is obsolete. However, the version is not extracted from the encrypted payload but rather from the sent message, which can be easily faked (replayed). Payloads of the second type, which can be encrypted multiple times and may contain data or code, do not have access to that information and rely solely on the digital signature and version checks to be stored and/or executed.

8.5.1 Execution with No Storage

The P2P server can spawn a binary

payload segment directly from a single message, without the need to

store this code in the registry first. This payload is spawned

directly as a thread. The thread is created with a list of parameters that include the IP of the peer that sent the payload, the port from which it was sent, a pointer to the list of obfuscated APIs, and a pointer to GetProcAddress so that the thread can load more APIs, if needed.

In this way, threads can be delivered

to a resident Conficker process directly from the P2P channel, where

the only sources of the downloaded code logic reside in the resident

memory of the Conficker process or the encrypted P2P

message. This method of message-to-thread spawning is implemented via subroutine parse_recvd_packet, and is shown in Source Listing 5.

The P2P server can also exchange complex multi-encrypted payload structures, and then iteratively decrypt each chunk. Upon decryption, the file expiration date in the payload header is validated and the version number is updated. The decrypted payload could be code or data. If code, it is spawned as a thread but with no parameters. This capability is provided within the subroutine iterative_payload_decrypt, and is shown in Source Listing 19. This subroutine decrypts a payload that is received as multiple chunks and then checks for the presence of layered encryption and signatures. It also checks whether the payload is data or code that needs to be spawned as a thread. In both cases, the extracted payload is stored in the registry.
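The layered decryption loop described above can be sketched in C as follows. Everything concrete here is an assumption for illustration: the 5-byte toy header (one kind byte plus a big-endian expiration time), the single-byte XOR that stands in for the real cipher, the layer bound, and all names. Signature verification, version updating, chunk reassembly, and registry storage are deliberately left out.

```c
#include <stddef.h>
#include <stdint.h>

#define HDR_LEN    5     /* toy header: 1 kind byte + 4 expiration bytes */
#define MAX_LAYERS 8     /* arbitrary bound so malformed input cannot loop forever */
#define TOY_KEY    0x5A  /* stand-in for the real cipher, NOT Conficker's */

enum layer_kind { LAYER_WRAPPED = 0, LAYER_DATA = 1, LAYER_CODE = 2 };

struct peel_result {
    enum layer_kind kind;   /* LAYER_DATA or LAYER_CODE for the innermost layer */
    const uint8_t *body;    /* innermost body, pointing into the caller's buffer */
    size_t body_len;        /* length of the innermost body */
    int layers_removed;     /* number of encrypted layers that were stripped */
};

/* Read a 32-bit big-endian integer. */
static uint32_t read_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/*
 * Strip encrypted layers in place until a data or code layer is reached.
 * Each layer's expiration time is validated before its body is used.
 * Returns 0 on success, -1 if truncated, -2 if expired, -3 if malformed
 * or nested too deeply.
 */
int peel_layers(uint8_t *buf, size_t len, uint32_t now, struct peel_result *out)
{
    int depth = 0;

    for (;;) {
        if (len < HDR_LEN)
            return -1;
        if (read_be32(buf + 1) < now)
            return -2;

        uint8_t kind = buf[0];
        uint8_t *body = buf + HDR_LEN;
        size_t body_len = len - HDR_LEN;

        if (kind == LAYER_DATA || kind == LAYER_CODE) {
            out->kind = (enum layer_kind)kind;
            out->body = body;
            out->body_len = body_len;
            out->layers_removed = depth;
            return 0;
        }
        if (kind != LAYER_WRAPPED || depth == MAX_LAYERS)
            return -3;

        /* "Decrypt" this layer, then treat its body as the next layer. */
        for (size_t i = 0; i < body_len; i++)
            body[i] ^= TOY_KEY;
        buf = body;
        len = body_len;
        depth++;
    }
}
```

A caller would inspect `kind` in the result to decide whether the innermost body is data to be stored or code to be stored and then spawned as a parameterless thread, mirroring the two cases described in the text.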

Conclusion

We have presented a reverse

engineering analysis of the Conficker C P2P service, described its

software architecture in detail, and provided a source-level

description of much of its key functionality. The Conficker C

binary used to conduct this analysis was harvested on 5 March 2009

(UTC), and has an MD5 checksum hash of

5e279ef7fcb58f841199e0ff55cdea8b.

Perhaps the best way to view the Conficker P2P server is as a mechanism for propagating new executable thread segments into the resident Conficker C processes of all infected drones. Such threads not only allow the Conficker authors to implement complex coordination logic, but may also be used to directly hot patch the resident Conficker C process itself and fundamentally change its behavior, without requiring new portable executable (PE) distributions and installations. In fact, thread segments can be shipped throughout the peer network in a mode in which they are executed but not stored on the local hard drive. Thus, the only evidence of such code-level updates will reside in the encrypted packet stream.

These capabilities are provided while relying only on the correct implementation of an extremely minimal P2P protocol. The protocol appears specially crafted to meet the well-known functional requirements of the Conficker C network. Most of the significant functional complexity and cryptographic protections that exist within the P2P service are located in the binary download management logic. The effect of these design decisions appears to be a minimal and robust network protocol, one that requires an adversary to break the encryption protocols in order to compromise the security of the P2P network.

As with our previous efforts to

understand Conficker, this report represents a snapshot of our

understanding of a few key aspects of Conficker's functionality.

We remain in direct communication with numerous groups that continue to

monitor this botnet and analyze its internal logic, along with many

other Internet malware threats. We

strongly encourage and appreciate

your feedback, questions, corrections, and additions. This report

is a living online document, and we will continue to update its content

as warranted and as new developments arise.

Acknowledgments

We thank David Dittrich from the

University of Washington, Aaron Adams from Symantec Corporation, Andre

Ludwig from Packet Spy, and Rhiannon Weaver from the

Software Engineering Institute at Carnegie Mellon, for their thoughtful

feedback. Also thanks to Vitaly Kamlyuk from Kaspersky Lab

for his help in verifying our mistakes. Most

importantly, thanks to Cliff Wang from the Army Research Office, Karl

Levitt from the National Science Foundation, Doug Maughan from the

Department of Homeland Security, and Ralph Wachter from the Office of

Naval Research, for their continued efforts to fund open INFOSEC research, when so few other U.S. Government agencies are willing or able.

References

| [1] | Computer Associates. Win32/Conficker teams up with Win32/Waledac. http://community.ca.com/blogs/securityadvisor/archive/2009/04/15/win32-conficker-teams-up-with-win32-waledac.aspx, 2009. |

| [2] | Conficker Working Group. Home Page. http://www.confickerworkinggroup.org, 2009. |

| [3] | CAIDA. Conficker/Conflicker/Downadup as seen from the UCSD Network Telescope. http://www.caida.org/research/security/ms08-067/conficker.xml, 2009. |

| [4] | Vitaly Kamlyuk (Kaspersky Laboratory). Conficker C/E P2P Protocol. Private document, 2009. |

| [5] | Aaron Adams, Anthony Roe, and Raymond Ball (Symantec). W32.Downadup.C. DeepSight Threat Management System, Symantec Corporation. |

| [6] | Phillip Porras, Hassen Saidi, and Vinod Yegneswaran. An Analysis of Conficker's Logic and Rendezvous. http://mtc.sri.com/Conficker/, 2009. |

| [7] | Phillip Porras, Hassen Saidi, and Vinod Yegneswaran. Conficker C Analysis. http://mtc.sri.com/Conficker/addendumC/, 2009. |

| [8] | J. B. Grizzard, V. Sharma, C. Nunnery, B. B. Kang, and D. Dagon. Peer-to-peer botnets: overview and case study. In HotBots '07: Proceedings of the first conference on First Workshop on Hot Topics in Understanding Botnets, Berkeley, CA, USA, 2007. USENIX Association. |

| [9] | SRI International. Conficker C Active P2P Scanner. http://mtc.sri.com/Conficker/contrib/scanner.html, 2009. |

| [10] | David Fifield. An Analysis of Magic Table. http://www.bamsoftware.com/wiki/Nmap/PortSetGraphics#conficker, 2009. |

| [11] | D. Dittrich and S. Dietrich. P2P as botnet command and control: a deeper insight. In Proceedings of the 3rd International Conference On Malicious and Unwanted Software (Malware 2008). IEEE Computer Society, October 2008. http://staff.washington.edu/dittrich/misc/malware08-dd-final.pdf |

| [12] | D. Dittrich and S. Dietrich. New directions in P2P malware. In Proceedings of the 2008 IEEE Sarnoff Symposium, Princeton, New Jersey, USA, April 2008. http://staff.washington.edu/dittrich/misc/sarnoff08-dd.pdf |

Appendices

Appendix 1 Report Source Code Listings

Appendix 2 The Conficker P2P Module Source Code

View the Conficker P2P source tree.
